Playlists web crawler

This project is a large-scale web crawler. It automates a manual task that used to consume many person-hours every week. Each week, an online music broker publishes a large number of new releases to the music platforms (Spotify, Tidal, Claro Musica, YouTube Music, Amazon Music, and many more). The manual task was to open every playlist of interest and check whether any of the releases are present and, if so, in which position. After checking hundreds of playlists, the results are compiled into a report in Google Sheets, and a set of reports is created for the label managers. Each of these reports is restricted to one record label and shows that label's placements for the week.
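The core check described above (is a release in a playlist, and at which position?) can be sketched as a small pure function. This is only an illustration, not the project's actual code: the names `findPlacements`, `trackKey`, and `normalize`, the `{ artist, title, label }` object shape, and matching by normalized artist and title are all assumptions.

```javascript
// Hypothetical helpers: none of these names come from the project itself.

// Normalize text so that small differences in case or spacing do not prevent a match.
function normalize(text) {
  return text.trim().toLowerCase().replace(/\s+/g, ' ');
}

// Build a lookup key from artist and title (assumed matching rule).
function trackKey(track) {
  return `${normalize(track.artist)}|${normalize(track.title)}`;
}

// playlistTracks: tracks in the order they appear on the playlist page.
// releases: this week's new releases, each with an assumed `label` field.
// Returns one entry per release found, with its 1-based position.
function findPlacements(playlistTracks, releases) {
  const wanted = new Map(releases.map((r) => [trackKey(r), r]));
  const placements = [];
  playlistTracks.forEach((track, index) => {
    const release = wanted.get(trackKey(track));
    if (release) {
      placements.push({
        title: release.title,
        artist: release.artist,
        label: release.label,
        position: index + 1,
      });
    }
  });
  return placements;
}
```

Using a `Map` keyed by the normalized artist and title keeps the check linear in the playlist length, which matters when hundreds of playlists are compared against the same list of releases.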

To start the process, the user sends a report from the BI tool Looker to a webhook. This triggers the crawler, which spawns browsers that load the playlists the same way an anonymous user would.

The playlists to explore are listed in the same Google Sheet, so it’s very easy to add or replace playlists. The recommended setup is a separate sheet for each region (Mexican music, for example, has a small market in Japan).

After the crawl finishes, a new tab is created in the same Google Sheet that supplied the playlists. The tab is named after the date of the report.

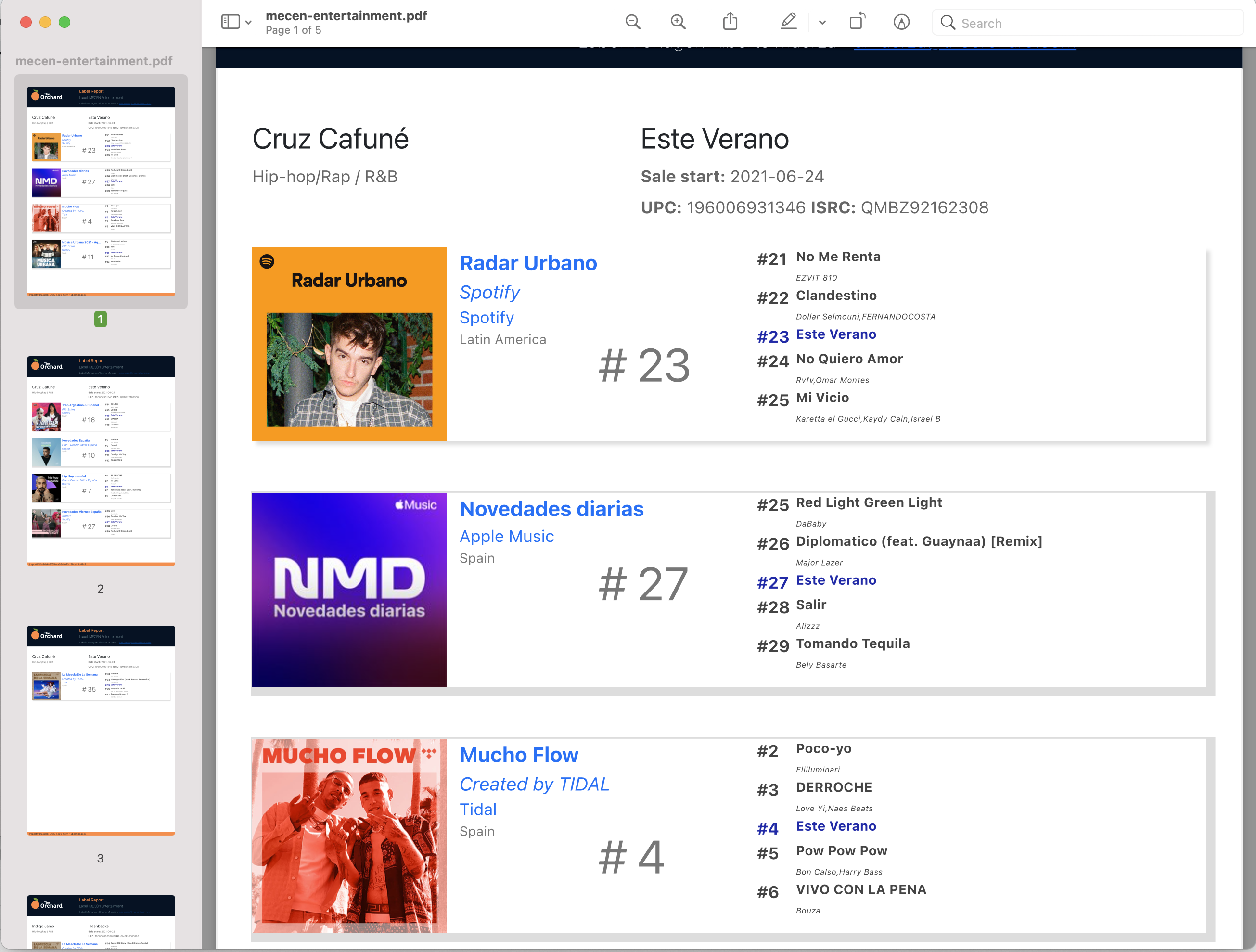

When this is done, another process takes over and generates a set of PDF Label Reports, which are sent to the label managers. These reports are used to discuss marketing strategies with the labels.

To speed up the process, the web pages are cached for 24 hours in a Redis server. All the code is written in Node.js.
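A 24-hour page cache of this kind is commonly implemented with the cache-aside pattern. The sketch below is an assumption about how it could look, not the project's code: `getPageCached`, the `page:` key prefix, and the `fetchPage` callback are hypothetical, and `client` is assumed to expose `get(key)` and `set(key, value, { EX: seconds })` as the node-redis v4 client does.

```javascript
// 24 hours expressed in seconds, used as the Redis expiry (EX).
const ONE_DAY_SECONDS = 24 * 60 * 60;

// Hypothetical helper. `client` is assumed to behave like a connected
// node-redis v4 client; `fetchPage` is an assumed async function that
// loads the page and resolves to its HTML.
async function getPageCached(client, url, fetchPage) {
  const key = `page:${url}`; // assumed key scheme

  // 1. Try the cache first; a missing key resolves to null.
  const cached = await client.get(key);
  if (cached !== null) {
    return cached;
  }

  // 2. Cache miss: load the page, then store it with a 24-hour expiry.
  const html = await fetchPage(url);
  await client.set(key, html, { EX: ONE_DAY_SECONDS });
  return html;
}
```

Letting Redis expire the keys means no separate cleanup job is needed: a page requested again after 24 hours is simply fetched and cached afresh.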

All results are saved to a MySQL database so they can be used in regression reports in the future. MySQL also controls user access.

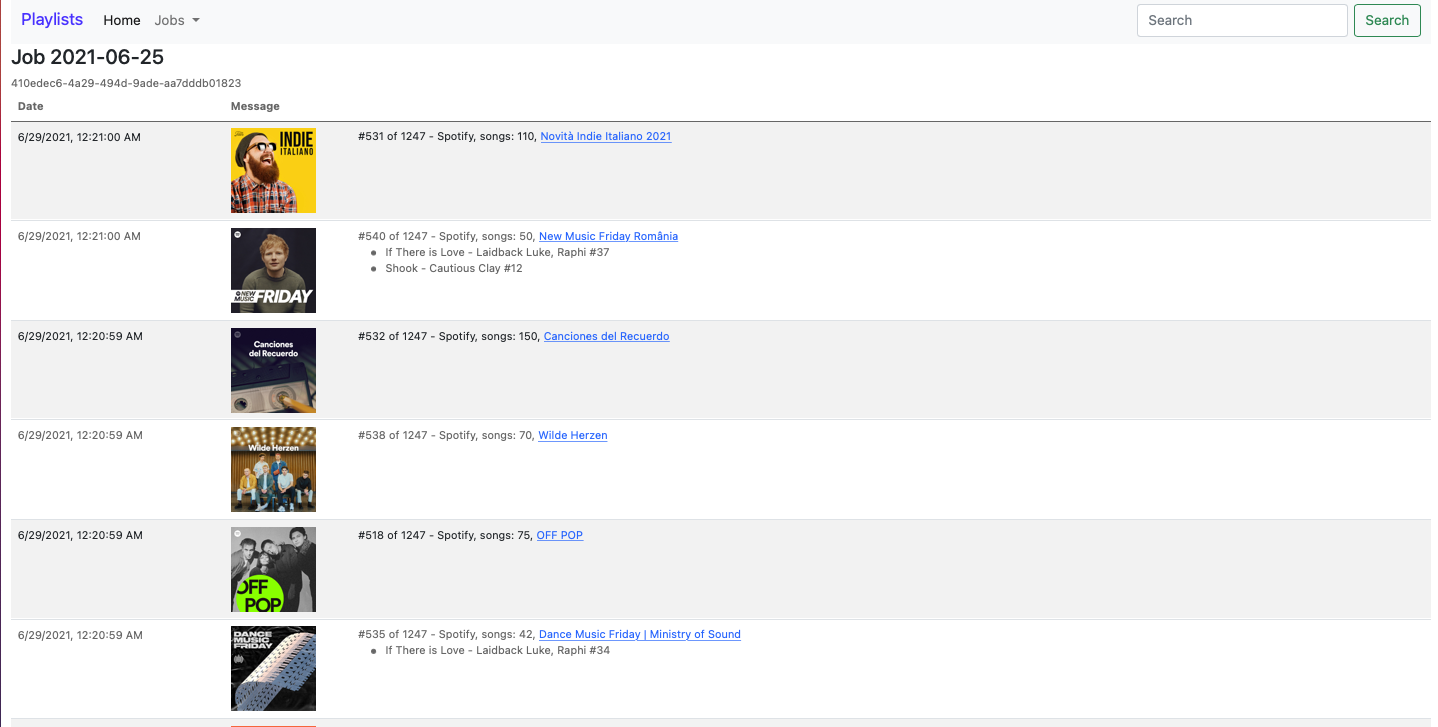

The end user can see what is going on by opening a website that, after authentication, shows the progress of each job. The page updates in real time using WebSockets, so you don’t need to reload it to refresh the data.